INTRODUCTION

Data often needs to be migrated from one system to another. The two persistent data systems, source and destination, may be entirely different and may come from different vendors.

The migration could be driven by a change in requirements or by technology advancements.

On top of that, the tier above, which uses the persistent system, may be changing as well.

To make sure that everything works on the new system, you may plan to route a small percentage of traffic to it and compare the results with those from the old stack.

To calibrate properly and find the differences between the result sets of the old and new systems, the data must be kept synchronized across the two systems, even though each stores it differently.

If that’s the case, this article can help you achieve data synchronization across heterogeneous systems on an ongoing basis.

This article presents an approach for moving data seamlessly and incrementally from your current data storage system to a different one, whether on premises or in the cloud.

TERMS USED

Batch: A collection of data records to be moved across in one operation

BatchState: The status of a batch transfer: IN_PROGRESS, FAILED, or COMPLETED

Metadata: The batch details that help determine the next batch of data to be synchronized

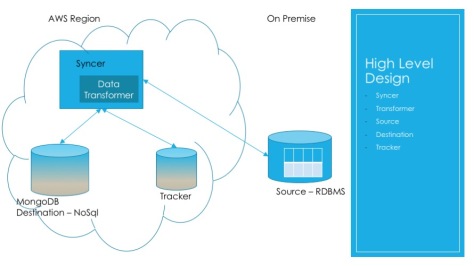

WHICH COMPONENTS ARE INVOLVED?

Data Source: The actual source containing the original data to be synchronized

Data Destination: The persistent system where you want your data to be moved to

Syncer Component: Responsible for detecting the incremental changes and synchronizing them

Transformer Component: Responsible for transforming the source data structure into the destination data structure. This is required if you restructure the data.

Tracker System: Responsible for storing the status and details of the last batch of data synced

The diagram below depicts the problem statement: syncing on-premises RDBMS data to MongoDB, a NoSQL storage system, in the AWS cloud.

WHY INCREMENTALLY?

You may have a huge volume of data in your storage system that cannot be moved in a single operation. Resource constraints such as memory and network bandwidth may hinder the synchronization.

And what if this data is changed frequently by business users? A full synchronization each time can prove costly.

How can we reduce this cost? How do we increase the chances of successful data synchronization?

How can we make this process resilient and resume from the point where it stopped or failed the last time?

How about splitting up the Data to be synchronized?

How about defining a batch of data, pulling only the data of that batch, and then transferring it?

To accomplish this, we need to store details that let us determine how much data we have already synced and which batch of data to sync next.

HOW IT WORKS?

Before we go further into the steps involved, let’s understand the batch metadata.

WHAT COULD BE BATCH METADATA?

    {
        "batchOffset" : 0,
        "batchSize" : 20,
        "migrationStartDateTime" : NumberLong(1486026714594),
        "migrationEndDateTime" : NumberLong(1486026718245),
        "rulesUpdateDateTimeBeginPickedUpForMigration" : null,
        "rulesUpdateDateTimeEndPickedUpForMigration" : null,
        "status" : "COMPLETED",
        "remarks" : "",
        "isIncrementalModeOn" : false
    }

batchOffset is the marker. Based on the status, you can compute where to begin or resume the process: if the last batch was synced successfully, the next batch starts at batchOffset + batchSize; if the last batch failed, it starts again at batchOffset.
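The rule above can be sketched in a few lines. This is a minimal illustration, not the article's implementation: the function name `next_batch_offset` is hypothetical, and treating a leftover IN_PROGRESS entry like a failed batch is an assumption about how a crashed run should be resumed.

```python
from typing import Optional


def next_batch_offset(last_batch: Optional[dict]) -> int:
    """Return the offset at which the next batch should start."""
    if last_batch is None:
        # Nothing has been synced yet: start from the first record.
        return 0
    if last_batch["status"] == "COMPLETED":
        # Last batch went through: move past it.
        return last_batch["batchOffset"] + last_batch["batchSize"]
    # FAILED, or IN_PROGRESS left behind by a crash (assumption): retry it.
    return last_batch["batchOffset"]
```

With the sample metadata shown earlier (batchOffset 0, batchSize 20, status COMPLETED), the next batch would start at offset 20.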

batchSize denotes the number of records you want to sync in a single operation, and thus also the amount of data.

It should be neither too small (which results in more round trips and more processing time) nor too big (which requires more resources such as memory and network bandwidth).

status denotes the sync status of the batch.

isIncrementalModeOn denotes whether the sync process is pulling only the incremental updates (including additions). When it is true, the source data has already been completely synchronized once.

rulesUpdateDateTimeBeginPickedUpForMigration and rulesUpdateDateTimeEndPickedUpForMigration denote the time boundaries for incremental updates. They are used to pull the changes made during this time period.

migrationStartDateTime and migrationEndDateTime are useful for tracking how long the batch sync took.

With this information, let’s look at the sequence of events that syncs a batch of data.

The process is initiated or resumed by the Syncer component.

- The Syncer pulls the details of the last migrated batch from the Tracker system.

- Using the batch metadata, it identifies the next batch of data to be synchronized.

- It makes an entry in the Tracker system to store the next batch metadata with IN_PROGRESS status.

- It then builds the query and pulls the records for the next batch from the source system. You can use any ORM, such as Hibernate or another JPA implementation, to get the data.

- It then delegates to the Transformer to transform the source data structure into the destination data structure.

- With the transformed data, it identifies which data has to be created and which has to be updated, and splits the data accordingly.

- It then sends the data to the destination system.

- Depending upon the outcome of the operation, it marks the batch as either COMPLETED or FAILED.

This sequence of steps repeats until there is no more data to sync.
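The steps above can be sketched as a loop. This is only an in-memory illustration under stated assumptions: a Python list stands in for the source, a dict keyed by `_id` stands in for the destination, another list stands in for the Tracker, and `run_full_sync` and `transform` are hypothetical names. A real Syncer would query the source through an ORM and write to the destination store instead.

```python
def run_full_sync(source, destination, tracker, transform, batch_size=20):
    """Sync `source` into `destination` batch by batch (in-memory sketch)."""
    while True:
        # Read the last batch entry and work out where the next batch starts.
        last = tracker[-1] if tracker else None
        if last is None:
            offset = 0
        elif last["status"] == "COMPLETED":
            offset = last["batchOffset"] + last["batchSize"]
        else:
            offset = last["batchOffset"]  # retry the unfinished batch

        # Pull the records of this batch. The sketch pulls before writing
        # the tracker entry so that it can stop cleanly when nothing is left.
        records = source[offset:offset + batch_size]
        if not records:
            return

        # Record the batch as IN_PROGRESS before sending anything.
        entry = {"batchOffset": offset, "batchSize": batch_size,
                 "status": "IN_PROGRESS", "remarks": ""}
        tracker.append(entry)
        try:
            # Transform, then split into creates and updates.
            docs = [transform(r) for r in records]
            to_create = [d for d in docs if d["_id"] not in destination]
            to_update = [d for d in docs if d["_id"] in destination]
            # Send to the destination.
            for doc in to_create + to_update:
                destination[doc["_id"]] = doc
            entry["status"] = "COMPLETED"
        except Exception as exc:
            # Mark the batch FAILED and stop; the next run resumes from here.
            entry["status"] = "FAILED"
            entry["remarks"] = str(exc)
            raise
```

Stopping on the first failure, rather than retrying inside the loop, is a design choice of this sketch: the FAILED entry in the tracker is what lets the next run resume from the same offset.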

At this point, isIncrementalModeOn is saved as true in the Tracker system. From then on, the Syncer can adjust its query to pull only the data records changed within a time window.
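As a rough sketch of how the query might change between the two modes, the function below builds a parameterized SQL string from the batch metadata. The table name `rules`, the columns `id` and `updated_at`, and the function name `build_batch_query` are all assumptions made for illustration; the paging clause uses SQL Server's `OFFSET ... FETCH` syntax, and the window boundaries are passed through exactly as stored in the metadata.

```python
def build_batch_query(meta: dict):
    """Return (sql, params) for the batch described by `meta`."""
    sql = "SELECT * FROM rules"  # hypothetical table name
    params = []
    if meta["isIncrementalModeOn"]:
        # Half-open window (begin, end], so that consecutive windows
        # neither overlap nor leave a gap (a choice of this sketch).
        sql += " WHERE updated_at > ? AND updated_at <= ?"
        params += [meta["rulesUpdateDateTimeBeginPickedUpForMigration"],
                   meta["rulesUpdateDateTimeEndPickedUpForMigration"]]
    # A stable ordering is needed for offset-based paging to be repeatable.
    sql += " ORDER BY id OFFSET ? ROWS FETCH NEXT ? ROWS ONLY"
    params += [meta["batchOffset"], meta["batchSize"]]
    return sql, params
```

In full-sync mode the query pages through the whole table; in incremental mode the same paging applies only to rows changed inside the window.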

BATCH PROCESS STATE

If you want primary and secondary sync processes to guarantee high availability of the sync process, you need to maintain and detect the various states of a sync process. With this data, you can ensure that no two sync processes run at the same time.
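One possible way to keep only one process active is a lease with a heartbeat, sketched below. The article does not say which mechanism it uses, so this is an assumption: the `lease` record, the `try_acquire` function, and the 60-second timeout are all hypothetical. In a real Tracker system the check and the update would have to happen as one atomic operation; the plain dict here does not provide that.

```python
def try_acquire(lease: dict, process_id: str, now: float,
                ttl_seconds: float = 60.0) -> bool:
    """Try to become (or remain) the active sync process.

    `lease` is a stand-in for a record in the Tracker system holding the
    current owner and the time of its last heartbeat.
    """
    owner = lease.get("owner")
    expired = now - lease.get("heartbeat", 0.0) > ttl_seconds
    if owner is None or owner == process_id or expired:
        # Free, already ours, or the previous owner stopped heartbeating.
        lease["owner"] = process_id
        lease["heartbeat"] = now
        return True
    # Another process is alive and holds the lease: stay passive.
    return False
```

The primary calls this before every batch, which also renews its heartbeat; the secondary calls it periodically and takes over only once the primary's heartbeat has gone stale.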

BATCH STATES aka STATUS

Every individual batch of data goes through a few states. The diagram below represents the states a batch goes through in the syncing process.
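The three states named under TERMS USED can be modeled as a small state machine. The transitions below are an assumption inferred from the sync steps (a batch starts IN_PROGRESS, ends COMPLETED or FAILED, and a failed batch is retried); they are not taken from the diagram, and the names `ALLOWED_TRANSITIONS` and `transition` are hypothetical.

```python
from enum import Enum


class BatchState(Enum):
    IN_PROGRESS = "IN_PROGRESS"
    COMPLETED = "COMPLETED"
    FAILED = "FAILED"


# Assumed transitions: a running batch either completes or fails,
# a failed batch can be retried, and a completed batch is final.
ALLOWED_TRANSITIONS = {
    BatchState.IN_PROGRESS: {BatchState.COMPLETED, BatchState.FAILED},
    BatchState.FAILED: {BatchState.IN_PROGRESS},
    BatchState.COMPLETED: set(),
}


def transition(current: BatchState, target: BatchState) -> BatchState:
    """Return `target` if the move is allowed, otherwise raise ValueError."""
    if target not in ALLOWED_TRANSITIONS[current]:
        raise ValueError(f"illegal transition {current.name} -> {target.name}")
    return target
```

Rejecting illegal moves, such as reopening a COMPLETED batch, helps keep the Tracker entries trustworthy as the basis for resuming.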

THINGS TO KEEP IN MIND

- Idempotency and Duplicate Prevention:

We are transferring a batch of records, so a batch may succeed only partially: a few records get synced and the rest fail for some reason. If you then retry posting the data, the same data may be saved twice or more. To prevent this, query the destination to determine which data has to be inserted and which has to be updated. You can make use of unique indexes or a similar concept.
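A retry after a partial success can be made safe by splitting the batch against what already exists at the destination. The sketch below is illustrative only: a dict keyed by `_id` stands in for the destination store, and `split_batch` and `apply_batch` are hypothetical names. In a real store, a unique index on the key or an upsert operation would typically enforce the same guarantee.

```python
def split_batch(docs, existing_ids):
    """Split `docs` into records to insert and records to update."""
    to_insert = [d for d in docs if d["_id"] not in existing_ids]
    to_update = [d for d in docs if d["_id"] in existing_ids]
    return to_insert, to_update


def apply_batch(destination, docs):
    """Apply a batch so that replaying it never creates duplicates."""
    to_insert, to_update = split_batch(docs, set(destination))
    for doc in to_insert:
        destination[doc["_id"]] = dict(doc)
    for doc in to_update:
        destination[doc["_id"]].update(doc)
    # Return the counts so the caller can log what the retry actually did.
    return len(to_insert), len(to_update)
```

If two of three records were already saved by an earlier attempt, replaying the batch inserts only the missing one and updates the other two in place.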

- Timezone Differences:

The Syncer and the data source can be in different timezones, or the source data may be stored in a specific timezone. So, if you pull records based on a time window, make sure the window boundaries are converted to the source system’s timezone before querying.
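As a small illustration of that conversion, the function below turns an epoch-millisecond boundary, like the values stored in the batch metadata, into a naive local datetime in the source's timezone. It assumes the source stores naive local timestamps, which may not match your schema; the function name `to_source_local` and the fixed UTC+05:30 offset in the test are made up for the example. For named zones with daylight saving rules, Python's `zoneinfo` module is the usual choice.

```python
from datetime import datetime, timedelta, timezone, tzinfo

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_source_local(epoch_millis: int, source_tz: tzinfo) -> datetime:
    """Convert an epoch-millisecond instant to the source's local time."""
    # Integer-based arithmetic avoids float rounding of the milliseconds.
    aware_utc = EPOCH + timedelta(milliseconds=epoch_millis)
    # Shift to the source timezone, then drop tzinfo because the source
    # is assumed to store naive local timestamps.
    return aware_utc.astimezone(source_tz).replace(tzinfo=None)
```

Both window boundaries should go through the same conversion before they are bound as query parameters.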

- Security:

For sensitive data, you can enable SSL/TLS at the transport layer. You may also want authentication and authorization enabled on both ends: the source and destination storage systems.

- Hard Deletes:

Soft deletes, such as marking a business rule inactive, are handled by the Syncer process. But what if a tuple is hard deleted from the source storage? For hard deletes, you may have to use triggers to capture the deleted tuples.

- Alert Mechanism to detect Stopped Sync Process:

The sync process can also fail for any reason. Without an alerting mechanism, the failure may go unnoticed and the heterogeneous systems can drift out of sync. To prevent this, log start and stop events to a sink such as Splunk and set up alerts on them.

WHAT QoS PARAMETERS ARE IMPLEMENTED?

- Eventual Consistency

- Guaranteed Sync

- Fault Tolerance

- Idempotency

- Also, updates made during a sync are not missed

HOSTING MECHANISM

There can be multiple ways to host a Syncer process. Depending upon the traffic your consuming application takes, you can

- Either host the syncer process under the same application which relies on this data

- Or, host it as a separate process and schedule it using AWS Lambda or AWS Batch

ALTERNATIVES

AWS DMS also offers ongoing data migration; however, it supports only selected storage systems. At the time of implementing this, AWS DMS did not support migration from MSSQL to MongoDB.

If you want to sync data to Amazon RDS, AWS DMS can be used.

Also, if you have a huge volume of data, in the hundreds of TBs, along with limited network bandwidth, and you want a quick one-time transfer, AWS Snowball is another offering you can use.